-

Giraffe Development in 2022

This post is part of the F# Advent Calendar 2021. Many thanks to Sergey Tihon for organising these. Go check out the many other excellent posts.

This year, I’ve run out of Xmas-themed topics. Instead, I’m just sharing a few tips from a recent project I’ve been working on…

I’m going to show…

- Dev Containers for F# Development

- A simple Giraffe Web Server

- Automated HTTP Tests

- Working with ASP.NET dependencies

You can see the full source code for this project on GitHub here

Dev Containers

Dev Containers are a feature of VS Code I was introduced to earlier this year and have since taken to using in all my projects.

They allow you to have a self-contained development environment defined in a Dockerfile, including all the dependencies your application requires and the extensions for Visual Studio Code.

If you have ever looked at the number of things you have installed for various projects and wondered where it all came from, and whether you still need it, Dev Containers solve that problem. They also give you a very simple way to share things with your collaborators; I no longer need a 10-step installation guide in a README file. Once you are set up for Dev Containers, getting going with a project that uses them is easy.

This blog is a GitHub Pages site, and to develop and test it locally I had to install Ruby and a bunch of Gems, and installing those on Windows is tricky at best. VS Code comes with some predefined Dev Container templates, so I just used the Jekyll one, and now I don’t have to install anything on my PC.

Dev Container for .NET

To get started, you will need WSL2 and the Remote Development Tools pack VS Code extension installed.

Then it’s just a matter of launching VS Code from inside my WSL2 instance:

```bash
cd ~/xmas-2021
code .
```

Now, in the VS Code Command Palette, I select Remote Containers: Add Development Container Configuration Files… A quick search for “F#” helps get the extensions I need installed. In this case I just picked the defaults.

Once the Dockerfile was created, I changed the `FROM` to use the standard .NET format that Microsoft uses (the F# template may have changed by the time you read this) to pull in the latest .NET 6 Bullseye base image.

Before:

```dockerfile
FROM mcr.microsoft.com/vscode/devcontainers/dotnet:0-5.0-focal
```

After:

```dockerfile
# [Choice] .NET version: 6.0, 5.0, 3.1, 6.0-bullseye, 5.0-bullseye, 3.1-bullseye, 6.0-focal, 5.0-focal, 3.1-focal
ARG VARIANT=6.0-bullseye
FROM mcr.microsoft.com/vscode/devcontainers/dotnet:0-${VARIANT}
```

VS Code will then prompt to Reopen in the Dev Container; selecting this will relaunch VS Code and build the Docker image. Once complete, we’re good to go.

Creating the Projects

Now that I’m in VS Code, using the Dev Container, I can run `dotnet` commands against the terminal inside VS Code. This is what I’ll be using to create the skeleton of the website:

```bash
# install the template
dotnet new -i "giraffe-template::*"

# create the projects
dotnet new giraffe -o site
dotnet new xunit --language f# -o tests

# create the sln
dotnet new sln
dotnet sln add site/
dotnet sln add tests/

# add the reference from tests -> site
cd tests/
dotnet add reference ../site/
cd ..
```

I also updated the projects’ target framework to `net6.0`, as the templates defaulted to `net5.0`.

For the `site/` project, I updated to the latest Giraffe 6 pre-release (alpha-2 as of now) and removed the reference to `Ply`, which is no longer needed. That done, I could run the site and the tests from inside the dev container:

```bash
dotnet run --project site/
dotnet test
```

Next, I’m going to rip out most of the code from the Giraffe template, just to give a simpler site to play with.

Excluding the `open`s, it is only a few lines:

```fsharp
let demo = text "hello world"

let webApp =
    choose [
        GET >=>
            choose [
                route "/" >=> demo
            ]
    ]

let configureApp (app : IApplicationBuilder) =
    app.UseGiraffe(webApp)

let configureServices (services : IServiceCollection) =
    services.AddGiraffe() |> ignore

[<EntryPoint>]
let main args =
    Host.CreateDefaultBuilder(args)
        .ConfigureWebHostDefaults(
            fun webHostBuilder ->
                webHostBuilder
                    .Configure(configureApp)
                    .ConfigureServices(configureServices)
                    |> ignore)
        .Build()
        .Run()
    0
```

I could have trimmed it further, but I’m going to use some of the constructs later.

When run, you can perform a `curl localhost:5000` against the site and get a “hello world” response.

Testing

I wanted to try out self-hosted tests against this API, so that I’m performing real HTTP calls and mocking as little as possible.

As Giraffe is based on ASP.NET, you can follow the same process as you would for testing an ASP.NET application.

You will need to add the TestHost package to the tests project:

```bash
dotnet add package Microsoft.AspNetCore.TestHost
```

You can then create a basic xUnit test like so:

```fsharp
let createTestHost () =
    WebHostBuilder()
        .UseTestServer()
        .Configure(configureApp)              // from the "Site" project
        .ConfigureServices(configureServices) // from the "Site" project

[<Fact>]
let ``First test`` () =
    task {
        use server = new TestServer(createTestHost())
        use msg = new HttpRequestMessage(HttpMethod.Get, "/")
        use client = server.CreateClient()

        use! response = client.SendAsync msg
        let! content = response.Content.ReadAsStringAsync()

        let expected = "hello test"
        Assert.Equal(expected, content)
    }
```

If you run `dotnet test`, it should fail because the test expects “hello test” instead of “hello world”. However, you have now invoked your server from your tests.

Dependencies

With this approach you can configure the site’s dependencies however you like, but as an example I’m going to show two different types of dependencies:

- App Settings

- Service Lookup

App Settings

Suppose your site relies on settings from the “appsettings.json” file, but you want to test with a different value.

Let’s add an app setting to the site first, then we’ll update the tests…

```json
{
  "MySite": {
    "MyValue": "100"
  }
}
```

I’ve removed everything else for the sake of brevity.

We need to make a few minor changes to the `demo` function and also create a new type to represent the settings:

```fsharp
[<CLIMutable>]
type Settings = { MyValue: int }

let demo =
    fun (next : HttpFunc) (ctx : HttpContext) ->
        let settings = ctx.GetService<IOptions<Settings>>()
        let greeting = sprintf "hello world %d" settings.Value.MyValue
        text greeting next ctx
```

And we need to update the `configureServices` function to load the settings:

```fsharp
// inside configureServices
let serviceProvider = services.BuildServiceProvider()
let settings = serviceProvider.GetService<IConfiguration>()
services.Configure<Settings>(settings.GetSection("MySite")) |> ignore
```

If you run the tests now, you get “hello world 0” returned.
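Why `0` rather than an error? When the `MySite` section is absent, the options binder leaves `MyValue` at its default. Here is a minimal sketch that reproduces this outside Giraffe. It is written as an F# script and assumes the Microsoft.Extensions.Configuration, Microsoft.Extensions.DependencyInjection and Microsoft.Extensions.Options.ConfigurationExtensions NuGet packages. The `boundValue` helper and the in-memory configuration are hypothetical stand-ins for `appsettings.json`:

```fsharp
#r "nuget: Microsoft.Extensions.Configuration"
#r "nuget: Microsoft.Extensions.DependencyInjection"
#r "nuget: Microsoft.Extensions.Options.ConfigurationExtensions"

open System.Collections.Generic
open Microsoft.Extensions.Configuration
open Microsoft.Extensions.DependencyInjection
open Microsoft.Extensions.Options

[<CLIMutable>]
type Settings = { MyValue: int }

/// Hypothetical helper: binds the "MySite" section of an in-memory
/// configuration to Settings, as configureServices does, and reads it back.
let boundValue (values: (string * string) list) =
    let config =
        ConfigurationBuilder()
            .AddInMemoryCollection(values |> List.map (fun (k, v) -> KeyValuePair(k, v)))
            .Build()

    let services = ServiceCollection() :> IServiceCollection
    services.Configure<Settings>(config.GetSection("MySite")) |> ignore

    use provider = services.BuildServiceProvider()
    provider.GetService<IOptions<Settings>>().Value.MyValue

printfn "section present: %d" (boundValue [ "MySite:MyValue", "3" ])
printfn "section missing: %d" (boundValue [])
```

The same mechanism is behind the test result: the test host never loads the site’s `appsettings.json`, so the section is missing and the record keeps its default value.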

However, if you `dotnet run` the site and use `curl`, you will see `hello world 100` returned.

This proves the configuration is loaded and read; however, it isn’t used by the tests, because the `appsettings.json` file isn’t part of the tests project. You could copy the file into the tests and that would solve the problem, but if you want different values for the tests you can create your own `appsettings.tests.json` file for them:

```json
{
  "MySite": {
    "MyValue": "3"
  }
}
```

To do that, we need a function that will load the test configuration, and then add it into the pipeline for creating the TestHost:

```fsharp
let configureAppConfig (app: IConfigurationBuilder) =
    app.AddJsonFile("appsettings.tests.json") |> ignore
    ()

let createTestHost () =
    WebHostBuilder()
        .UseTestServer()
        .ConfigureAppConfiguration(configureAppConfig) // Use the test's config
        .Configure(configureApp)                       // from the "Site" project
        .ConfigureServices(configureServices)          // from the "Site" project
```

Note: you will also need to tell the test project to include the `appsettings.tests.json` file:

```xml
<ItemGroup>
  <Content Include="appsettings.tests.json" CopyToOutputDirectory="always" />
</ItemGroup>
```

If you would like to use the same value from the config file in your tests, you can access it via the test server:

```fsharp
let config = server.Services.GetService(typeof<IConfiguration>) :?> IConfiguration
let expectedNumber = config["MySite:MyValue"] |> int
let expected = sprintf "hello world %d" expectedNumber
```

Services

In F# it’s nice to keep everything pure and functional, but sooner or later you will realise you need to interact with the outside world, and when testing from the outside like this, you may need to control those things.

Here I’m going to show you the same approach you would use for a C# ASP.NET site: using the built-in dependency injection framework.

```fsharp
type IMyService =
    abstract member GetNumber : unit -> int

type RealMyService() =
    interface IMyService with
        member _.GetNumber() = 42

let demo =
    fun (next : HttpFunc) (ctx : HttpContext) ->
        let settings = ctx.GetService<IOptions<Settings>>()
        let myService = ctx.GetService<IMyService>()
        let configNo = settings.Value.MyValue
        let serviceNo = myService.GetNumber()
        let greeting = sprintf "hello world %d %d" configNo serviceNo
        text greeting next ctx
```

I’ve created an `IMyService` interface and a class, `RealMyService`, to implement it.

configureServicesI’ve added it as a singleton:services.AddSingleton<IMyService>(new RealMyService()) |> ignoreNow the tests fail again because

42is appended to the results.To make the tests pass, I want to pass in a mocked

IMyServicethat has a number that I want.let luckyNumber = 8 type FakeMyService() = interface IMyService with member _.GetNumber() = luckyNumber let configureTestServices (services: IServiceCollection) = services.AddSingleton<IMyService>(new FakeMyService()) |> ignore () let createTestHost () = WebHostBuilder() .UseTestServer() .ConfigureAppConfiguration(configureAppConfig) // Use the test's config .Configure(configureApp) // from the "Site" project .ConfigureServices(configureServices) // from the "Site" project .ConfigureServices(configureTestServices) // mock services after real onesThen in the tests I can expect the

luckyNumber:let expected = sprintf "hello world %d %d" expectedNumber luckyNumberAnd everything passes.
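The “mock services after real ones” ordering matters because the built-in container resolves a single service from its last registration. The sketch below shows that behaviour outside ASP.NET. It assumes only the Microsoft.Extensions.DependencyInjection NuGet package, referenced from an F# script, and copies the two service types from above (the fake’s number is inlined):

```fsharp
#r "nuget: Microsoft.Extensions.DependencyInjection"

open Microsoft.Extensions.DependencyInjection

type IMyService =
    abstract member GetNumber : unit -> int

type RealMyService() =
    interface IMyService with
        member _.GetNumber() = 42

type FakeMyService() =
    interface IMyService with
        member _.GetNumber() = 8

// Register the "site" implementation first and the test double second,
// mirroring the order of the two ConfigureServices calls in createTestHost.
let services = ServiceCollection() :> IServiceCollection
services.AddSingleton<IMyService>(RealMyService()) |> ignore
services.AddSingleton<IMyService>(FakeMyService()) |> ignore

let provider = services.BuildServiceProvider()

// GetService<T> resolves the last registration for T...
let resolved = provider.GetService<IMyService>().GetNumber()

// ...while GetServices<T> still returns every registration, in order.
let everyNumber =
    provider.GetServices<IMyService>()
    |> Seq.map (fun service -> service.GetNumber())
    |> List.ofSeq

printfn "resolved %d from %A" resolved everyNumber
```

If the two `ConfigureServices` calls were swapped, the real service would win and the test would see `42` again.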

Conclusion

I hope this contains a few useful tips (if nothing else, I’ll probably be coming back to it in time to remember how to do some of these things) for getting going with Giraffe development in 2022.

You can see the full source code for this blog post here.

-

Access modifiers

This post is inspired by and in response to Pendulum swing: internal by default by Mark Seemann.

Access modifiers in .NET can be used in a number of ways; in this post I’ll talk about how I use them and why.

Firstly, I should point out that I am NOT a library author; if I were, I might do things differently.

Public and Internal classes

In .NET the `public` and `internal` access modifiers control the visibility of a class from another assembly. Classes that are marked as public can be seen from another project/assembly, and those that are internal cannot.

I view public as saying, “here is some code for other people to use”. When I choose to make something public, I’m making a conscious decision that I want another component of the system to use this code. If they are dependent on me, then this is something I want them to consume.

For anything that is internal, I’m saying: this code is part of my component, and only I should be using it.

When writing code within a project, I can use my public and internal types interchangeably, there is no difference between them.

If in my project I had these 2 classes:

```csharp
public class Formatter
{
    public void Format() { }
}

internal class NameFormatter
{
    public void Format() { }
}
```

and I was writing code elsewhere in my project, then I could choose to use either of them; there’s nothing stopping me using, or guiding me towards, one or the other. There’s no encapsulation provided by the use of internal.

NOTE: When I say ‘I’, I actually mean, a team working on something of significant complexity, and that not everyone working on the code may know it inside out. The objective is to make it so that future developers working on the code “fall into the pit of success”.

If my intention was that `NameFormatter` must not be used directly, I may use a different approach to “hide” it. For example, a private nested class:

```csharp
public class Formatter
{
    private class NameFormatter { }
}
```

or by using namespaces:

```text
Project.Feature.Formatter
Project.Feature.Formatters.NameFormatter
```

These might not be the best approaches, just a few ideas on how to make them less “discoverable”. The point I’m hoping to make is that within your own project `internal` doesn’t help; if you want to encapsulate logic, you need to use `private` (or `protected`).

In larger systems where people are dependent on my project, everything is internal by default, and only made public to surface the specific features they need.

Testing

So where does this leave me with unit testing? I am quite comfortable using `InternalsVisibleTo` to allow my tests access to the types they need.

The system I work on can have a lot of functionality that is `internal` and only triggered by its own logic, such as a plugin that is loaded for a UI, or a message processor.

Testing everything through a “Receive Message” type function could be arduous. That said, I do like “outside-in” testing and I can test many things that way, but it is not reasonable to test everything that way.

In one of the systems I maintain, I do test a lot of it this way:

- Arrange: putting the system in a state

- Act: sending an input into the system

- Assert: observing that the outputs are what is expected

Sending inputs and asserting on the outputs tells me how the system works.

However, some subcomponents of this system are rather complex on their own, such as the RFC4517 Postal Address parser I had to implement. When testing this behaviour it made much more sense to test this particular class in isolation with a more “traditional” unit test approach, such as Xunit.net’s Theory tests with a simple set of Inputs and Expected outputs.

I wouldn’t have wanted to make my parser public, it wasn’t part of my component my dependants should care about.

I hope to write more about my testing approaches in the future.

Another use case

For reasons I won’t go into, in one of the systems I work on a single “module” is made up of a number of assemblies/projects, and the system is made up of many modules. For this we use `InternalsVisibleTo` only so that the projects in the same module can see each other, in addition to unit testing as stated above.

This allows a single module to see everything it needs to, but dependent modules to only see what we choose to make visible. Keeping a small and focused API helps you know what others depend on and what the impact of your changes is.

Static Analysis

When you use static analysis like .NET Analysers they make assumptions about what your code’s purpose is based on the access modifier. To .NET Analysers, public code is library code, to be called by external consumers.

A few examples of things that only apply to public classes:

- Argument validation: you must check arguments are not null (also see below)

- Correct (or formal) `IDisposable` implementation

- Spelling checks

The options you have are to disable these rules, suppress them, or add the requisite code to support them.

- Disabling the rules means you don’t get the benefit of the analysis on any public code you may have that was written for use by external callers.

- Suppressing them is messy, and you should justify each suppression so you remember why you did it.

- Adding the requisite code is arduous, e.g. guards against nulls.

When you are using Nullable Reference Types from C# 8.0, the compiler protects you from accidentally dereferencing null. But `public` means that anyone can write code to call it, so it errs on the side of caution and still warns you that arguments may be null and you should check them.

Wrapping up

Given the limited value of using `public` within a project, I always default to `internal` and will happily test against internal classes, only using `public` when I think something should be part of a public API to another person or part of the system.

Internal types are only used by trusted and known callers. Nullable reference type checking works well with them, as it knows they can only be instantiated from within known code, allowing a more complete analysis.

If you are writing code that is to be maintained for years to come by people other than yourself, using public or internal won’t help; you need to find other approaches to ensure that code is encapsulated and consumed appropriately.

-

SnowPi in F#

This post is part of the F# Advent Calendar 2020. Many thanks to Sergey Tihon for organizing these. Go check out the many other excellent posts.

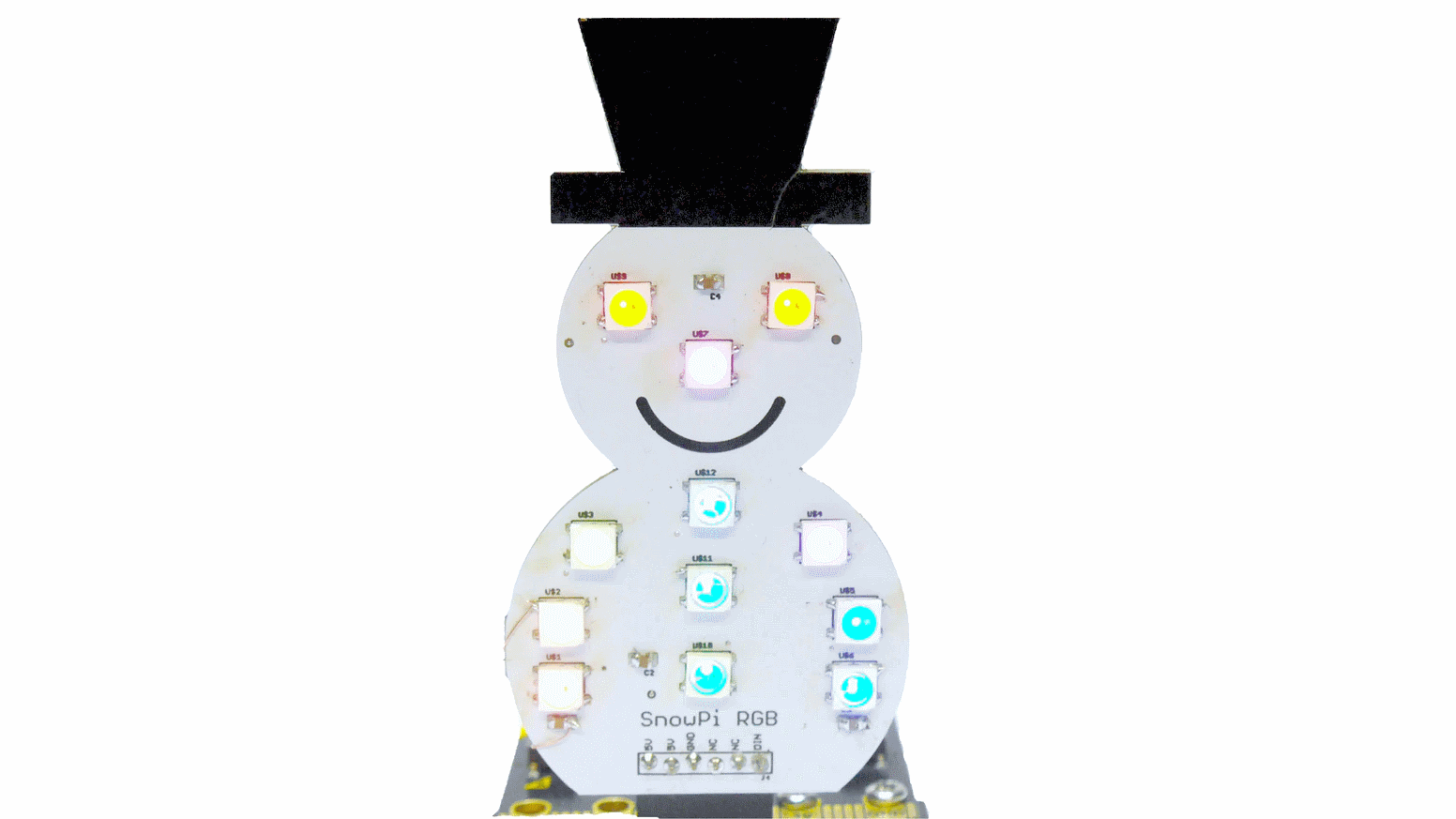

SnowPi RGB

Back in July I got an email from Kickstarter about a project for an RGB Snowman that works on Raspberry Pis and BBC micro:bits. My daughter loves building things on her micro:bit, and loves all things Christmassy, so I instantly backed it…

image from the KickStarter campaign

A few months later (and now in the proper season) my daughter has had her fun programming it for the micro:bit. Now it is my turn, and I thought it would make a good Christmas post if I could do it in F# and get it running on a Raspberry Pi with .NET Core / .NET.

Most of my Raspberry Pi programming so far has been with cobbled-together Python scripts with little attention to detail or correctness; I’ve never run anything .NET on a Raspberry Pi.

This is my journey to getting it working with F# 5 / .NET 5 and running on a Raspberry Pi.

Getting going

After my initial idea, next came the question, “can I actually do it?”. I took a look at the Python demo application that was created for the SnowPi and saw it used `rpi_ws281x`; a quick Google search for “rpi_ws281x .net” and, yep, this looks possible.

However, that wasn’t to be. I first tried the popular ws281x.Net package from NuGet, and despite following the instructions to set up the native dependencies, I only managed to get from `Seg Fault!` to `WS2811_ERROR_HW_NOT_SUPPORTED`, which seemed to indicate that my RPi 4 wasn’t supported and that I needed to update the native libraries. I couldn’t figure this out and gave up.

I then tried rpi-ws281x-csharp, which looked newer, and even with compiling everything from source, I still couldn’t get it working.

Getting there

After some more digging I finally found that Ken Sampson had a fork of rpi-ws281x-csharp which looked newer than the one I used before, and it had a NuGet package.

This one worked!

I could finally interact with the SnowPi from F# running in .NET 5. But so far all I had was “turn on all the lights”.

Developing

The problem with developing on a desktop PC and testing on an RPi is that it takes a while to build, publish, copy and test the programs.

I needed a way to test these more easily, so I decided to redesign my app to use Command Objects and decouple the instructions from their execution. Now I could provide an alternate executor for the console and see how it worked (within reason) without deploying to the Raspberry Pi.

Types

As with most F# projects, first, I needed some types.

The first one I created was the Position to describe in English where each LED was so I didn’t have to think too hard when I wanted to light one up.

```fsharp
type Position =
    | BottomLeft
    | MiddleLeft
    | TopLeft
    | BottomRight
    | MiddleRight
    | TopRight
    | Nose
    | LeftEye
    | RightEye
    | BottomMiddle
    | MiddleMiddle
    | TopMiddle
    static member All =
        Reflection.FSharpType.GetUnionCases(typeof<Position>)
        |> Seq.map (fun u -> Reflection.FSharpValue.MakeUnion(u, Array.empty) :?> Position)
        |> Seq.toList
```

The `All` member is useful when you need to access all positions at once.

I then created a `Pixel` record to store the state of an LED (this name was from the Python API, to avoid conflicts with the `rpi_ws281x` type `LED`), and a `Command` union to hold each of the commands you can do with the SnowPi:

```fsharp
type Pixel =
    { Position: Position
      Color : Color }

type Command =
    | SetLed of Pixel
    | SetLeds of Pixel list
    | Display
    | SetAndDisplayLeds of Pixel list
    | Sleep of int
    | Clear
```

Some of the commands (`SetLed` vs `SetLeds`, and `SetAndDisplayLeds` vs `SetLeds; Display`) are there for convenience when constructing commands.

Programs

With these types I could now model a basic program:

```fsharp
let redNose = { Position = Nose; Color = Color.Red }
let greenEyeL = { Position = LeftEye; Color = Color.LimeGreen }
// etc. Rest hidden for brevity

let simpleProgram =
    [ SetLeds [ redNose; greenEyeL; greenEyeR ]
      Display
      Sleep 1000
      SetLeds [ redNose; greenEyeL; greenEyeR; topMiddle ]
      Display
      Sleep 1000
      SetLeds [ redNose; greenEyeL; greenEyeR; topMiddle; midMiddle ]
      Display
      Sleep 1000
      SetLeds [ redNose; greenEyeL; greenEyeR; topMiddle; midMiddle; bottomMiddle ]
      Display
      Sleep 1000 ]
```

This is an F# list with 12 elements, each one corresponding to a command to be run by something.

It is quite easy to read what will happen, and I’ve given each of the `Pixel` values a nice name for reuse.

At the moment nothing happens until the program is executed:

The `execute` function takes a list of commands, then examines the config to determine which interface to execute it on.

Both the Real and Mock versions of `execute` have the same signature, so I can create a list of those functions and iterate through them, calling each one with the `cmds` argument:

```fsharp
let execute config cmds name =
    [ if config.UseSnowpi then Real.execute
      if config.UseMock then Mock.execute ] // (Command list -> unit) list
    |> List.iter (fun f ->
        Colorful.Console.WriteLine((sprintf "Executing: %s" name), Color.White)
        f cmds)
```

The `config` argument is partially applied so you don’t have to pass it every time:

```fsharp
let config = createConfigFromArgs argv
let execute = execute config

// I would have used `nameof` but Ionide doesn't support it at time of writing.
execute simpleProgram "simpleProgram"
```

Mock

The “Mock” draws a Snowman on the console, then writes to each of the “Pixels” (in this case the cursor is set to the correct X and Y position for each `[ ]`) in the correct colour, using the Colorful.Console library to help.

```fsharp
[<Literal>]
let Snowman = """
   ###############
    #############
     ###########
      #########
  #################
    /           \
   /  [ ]   [ ]  \
   |             |
    \    [ ]    /
     \         /
    /           \
   /     [ ]     \
  /  [ ]     [ ]  \
 /       [ ]       \
|   [ ]       [ ]   |
 \       [ ]       /
  \[ ]         [ ]/
   \_____________/
"""
```

The implementation is quite imperative, as I needed to match the behaviour of the native library in “Real”. The `SetLed` and `SetLeds` commands push a `Pixel` into a `ResizeArray<Pixel>` (`System.Collections.Generic.List<Pixel>`), and then a `Display` command calls `render`, which iterates over each item in the collection, draws the appropriate “X” on the Snowman in the desired colour, and then clears the list ready for the next render.

```fsharp
let private drawLed led =
    Console.SetCursorPosition (mapPosToConsole led.Position)
    Console.Write('X', led.Color)

let private render () =
    try
        Seq.iter drawLed toRender
    finally
        Console.SetCursorPosition originalPos
```

This is one of the things I really like about F#: it is a functional-first language, but I can drop into imperative code whenever I need to. I’ll come back to this point again later.

Using `dotnet watch run`, I can now write and test a program really quickly.
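The mock’s behaviour boils down to “queue pixels on `SetLed`/`SetLeds`, draw them on `Display`”, so the same semantics can be checked without a console. The sketch below is a minimal console-free interpreter that records each displayed frame instead of drawing it. The cut-down types with string colours and the `simulate` function are hypothetical, not part of the project:

```fsharp
// Hypothetical stand-ins for the post's types; colours are plain strings here
// so the sketch needs nothing beyond FSharp.Core.
type Position =
    | Nose
    | LeftEye
    | RightEye

type Pixel = { Position: Position; Color: string }

type Command =
    | SetLed of Pixel
    | SetLeds of Pixel list
    | Display
    | SetAndDisplayLeds of Pixel list
    | Sleep of int
    | Clear

/// Console-free interpreter with the same buffering semantics as the mock:
/// Set* commands queue pixels; Display captures the queue as a "frame" and
/// empties it. Assumption: Clear simply discards anything still queued.
let simulate (cmds: Command list) : Pixel list list =
    let pending = ResizeArray<Pixel>()
    let frames = ResizeArray<Pixel list>()

    let rec run cmd =
        match cmd with
        | SetLed p -> pending.Add(p)
        | SetLeds ps -> pending.AddRange(ps)
        | Display ->
            frames.Add(List.ofSeq pending)
            pending.Clear()
        | SetAndDisplayLeds ps ->
            run (SetLeds ps)
            run Display
        | Sleep _ -> () // no need to wait in a simulation
        | Clear -> pending.Clear()

    List.iter run cmds
    List.ofSeq frames

let redNose = { Position = Nose; Color = "red" }
let greenEye = { Position = LeftEye; Color = "green" }

simulate [ SetLed redNose; SetLed greenEye; Display; Sleep 1000; SetAndDisplayLeds [ greenEye ] ]
|> List.iteri (fun i frame -> printfn "frame %d: %A" i frame)
```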

Real SnowPi

Implementing the “real” SnowPi turned out to be trivial, albeit imperative.

Just following the C# examples from the GitHub repo of rpi-ws281x-csharp and porting them to F# was enough to get me going with what I needed.

For example, the following snippet is nearly the full implementation:

```fsharp
open rpi_ws281x
open System.Drawing

let settings = Settings.CreateDefaultSettings()
let controller =
    settings.AddController(
        controllerType = ControllerType.PWM0,
        ledCount = NumberOfLeds,
        stripType = StripType.WS2811_STRIP_GRB,
        brightness = 255uy,
        invert = false)

let rpi = new WS281x(settings)

// Call once at the start
let setup () = controller.Reset()

// Call once at the end
let teardown () = rpi.Dispose()

let private setLeds pixels =
    let toLedTuple pixel = (posToLedNumber pixel.Position, pixel.Color)
    pixels
    |> List.map toLedTuple
    |> List.iter controller.SetLED

let private render () = rpi.Render()
```

The above snippet gives most of the functions you need to execute the commands against:

```fsharp
let rec private executeCmd cmd =
    match cmd with
    | SetLed p -> setLeds [ p ]
    | SetLeds ps -> setLeds ps
    | Display -> render ()
    | SetAndDisplayLeds ps ->
        executeCmd (SetLeds ps)
        executeCmd Display
    | Sleep ms -> System.Threading.Thread.Sleep(ms)
    | Clear -> clear ()
```

Other Programs

Just to illustrate composing a few programs, I’ll post two more: one simple traffic light program I created, and one I copied from the demo app in the Python repository:

Traffic Lights

This displays the traditional British traffic light sequence. First, I create lists for each of the pixels and their associated colours (`createPixels` is a simple helper function). By appending the red and amber lists together, I can combine both red and amber pixels into a new list that will display red and amber at the same time.

```fsharp
let red =
    [ LeftEye; RightEye; Nose ]
    |> createPixels Color.Red
let amber =
    [ TopLeft; TopMiddle; TopRight; MiddleMiddle ]
    |> createPixels Color.Yellow
let green =
    [ MiddleLeft; BottomLeft; BottomMiddle; MiddleRight; BottomRight ]
    |> createPixels Color.LimeGreen

let redAmber = List.append red amber

let trafficLights =
    [ Clear
      SetAndDisplayLeds green
      Sleep 3000
      Clear
      SetAndDisplayLeds amber
      Sleep 1000
      Clear
      SetAndDisplayLeds red
      Sleep 3000
      Clear
      SetAndDisplayLeds redAmber
      Sleep 1000
      Clear
      SetAndDisplayLeds green
      Sleep 1000 ]
```

The overall program is just a set of commands to first clear, then set the LEDs and display them at the same time, then sleep for a prescribed duration, before moving on to the next one.
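The post doesn’t show `createPixels` itself. The sketch below assumes it simply pairs every position in the list with one colour. The name comes from the post; the body and the cut-down `Position` type are guesses:

```fsharp
open System.Drawing

// Hypothetical cut-down Position and Pixel types mirroring the post.
type Position =
    | LeftEye
    | RightEye
    | Nose
    | TopLeft
    | TopMiddle
    | TopRight
    | MiddleMiddle

type Pixel = { Position: Position; Color: Color }

// One possible createPixels: paint every position in the list the same colour.
let createPixels (color: Color) (positions: Position list) : Pixel list =
    positions |> List.map (fun position -> { Position = position; Color = color })

let red = [ LeftEye; RightEye; Nose ] |> createPixels Color.Red
let amber = [ TopLeft; TopMiddle; TopRight; MiddleMiddle ] |> createPixels Color.Yellow

// Appending keeps both sets of pixels, so they light up together.
let redAmber = List.append red amber
```

Taking the colour as the first parameter is what makes the `|> createPixels Color.Red` pipeline style above work.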

Colour Wipe

This program is ported directly from the Python sample with a slight F# twist:

```fsharp
let colorWipe col =
    Position.All
    |> List.sortBy posToLedNumber
    |> List.collect (fun pos ->
        [ SetLed { Position = pos; Color = col }
          Display
          Sleep 50 ])

let colorWipeProgram =
    [ for _ in [ 1..5 ] do
          for col in [ Color.Red; Color.Green; Color.Blue ] do
              yield! colorWipe col ]
```

The `colorWipe` function sets each LED in turn to a specified colour, displays it, waits 50ms, and moves on to the next one. `List.collect` is used to flatten the list of lists of commands into just a list of commands.

The `colorWipeProgram` repeats this 5 times, wiping in red, then green, then blue each time. Whilst it may look imperative, it is using list comprehensions and is still just building commands to execute later.

Full project

The entire project is on GitHub here, if you want to have a look at the full source code and maybe even get a SnowPi and try it out.

Summing up

The project started out fully imperative, and proved quite hard to implement correctly, especially as I wrote the mock first and then implemented the real SnowPi. The mock was written with different semantics to the real SnowPi interface, and had to be rewritten a few times.

Once I moved to using Commands and got the right set of commands, I didn’t have to worry about refactoring the programs as I tweaked implementation details.

The building of programs from commands is purely functional and referentially transparent. You can see what a program will do before you even run it. This allowed me to use functional principles to build up the programs, despite both implementations being rather imperative and side-effect driven.

Going further, if I were to write tests for this, the important part would be the programs, which I could assert were formed correctly, without ever having to render them.
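Here is a minimal sketch of such a test. It uses cut-down types with string colours so it runs with nothing beyond FSharp.Core; the three-LED traffic light and the property names are hypothetical. The program is inspected as plain data: which colours it shows, in what order, and how long it sleeps.

```fsharp
type Position =
    | Nose
    | TopMiddle
    | BottomMiddle

type Pixel = { Position: Position; Color: string }

type Command =
    | SetAndDisplayLeds of Pixel list
    | Sleep of int
    | Clear

let red = [ { Position = Nose; Color = "red" } ]
let amber = [ { Position = TopMiddle; Color = "amber" } ]
let green = [ { Position = BottomMiddle; Color = "green" } ]
let redAmber = List.append red amber

let trafficLights =
    [ Clear; SetAndDisplayLeds green; Sleep 3000
      Clear; SetAndDisplayLeds amber; Sleep 1000
      Clear; SetAndDisplayLeds red; Sleep 3000
      Clear; SetAndDisplayLeds redAmber; Sleep 1000
      Clear; SetAndDisplayLeds green; Sleep 1000 ]

// The colour sets shown, in order, without rendering anything.
let shown =
    trafficLights
    |> List.choose (function
        | SetAndDisplayLeds pixels -> Some(pixels |> List.map (fun p -> p.Color))
        | _ -> None)

// Total time the program spends sleeping, in milliseconds.
let totalSleepMs =
    trafficLights |> List.sumBy (function Sleep ms -> ms | _ -> 0)

// Every display should be immediately preceded by a Clear.
let clearsBeforeEachDisplay =
    trafficLights
    |> List.pairwise
    |> List.forall (fun (previous, current) ->
        match current with
        | SetAndDisplayLeds _ -> previous = Clear
        | _ -> true)
```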

-

Xmas List Parser

This post is part of the F# Advent Calendar 2019. Many thanks to Sergey Tihon for organizing these.

Last year I wrote an app for Santa to keep track of his list of presents to buy for the nice children of the world.

Sadly, the development team didn’t do proper research into Santa’s requirements; they couldn’t be bothered with a trek to the North Pole and just sat at home watching “The Santa Clause” and then reckoned they knew it all. Luckily no harm came to Christmas 2018.

The good news is, Santa’s been in touch and the additional requirements for this year are:

- I don’t want to retype all the bloomin’ letters.

- I’d like to send presents to naughty children.

The Problem

This year I’m going to walk through how you can solve Santa’s problem using something I’ve recently begun playing with: FParsec.

FParsec is a parser combinator library for F#.

I’d describe it as: a library that lets you write a parser by combining functions.

This is only my second go at using it, my first was to solve Mike Hadlow’s “Journeys” coding challenge. So this might not be the most idiomatic way to write a parser.

We’ll assume that Santa has bought some off the shelf OCR software and has scanned in some Christmas lists into a text file.

Example

```text
Alice: Nice
- Bike
- Socks * 2
Bobby: Naughty
- Coal
Claire:Nice
-Hat
- Gloves * 2
- Book
Dave : Naughty
- Nothing
```

As you can see, the OCR software hasn’t done too well with the whitespace. We need a parser that is able to parse this into some nice F# records and handle the lack of perfect structure.

Domain

When writing solutions in F# I like to model the domain first:

```fsharp
module Domain =

    type Behaviour = Naughty | Nice

    type Gift =
        { Gift: string
          Quantity: int }

    type Child =
        { Name: string
          Behaviour: Behaviour
          Gifts: Gift list }
```

First, the `Behaviour` is modelled as a discriminated union: either `Naughty` or `Nice`.

A record for the `Gift` holds the name of a gift and the quantity.

The `Child` record models the name of the child, their behaviour and a list of gifts they are getting. The overall output of successfully parsing the text will be a list of `Child` records.

Parsing

Initially I thought it would be a clever idea to parse the text directly into the domain model. That didn’t work out, so instead I defined my own AST to parse into, and then later map that into the domain model.

```fsharp
type Line =
    | Child of string * Domain.Behaviour
    | QuantifiedGift of string * int
    | SingleGift of string
```

A `Child` line represents a child and their `Behaviour` this year. A `QuantifiedGift` represents a gift that was specified with a quantity (e.g. “Bike * 2”) and a `SingleGift` represents a gift without a quantity.

Modelling it this way avoids putting domain logic into your parser: for example, what is the quantity of a single gift? It might seem trivial, but the less the parser knows about your domain, the easier it is to create.
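This part of the post doesn’t show the mapping step. The sketch below is one possibility. It assumes the lines arrive in document order, with each gift following its child; the `toDomain` name and its error handling are hypothetical. It shows how the “a single gift means a quantity of 1” decision can live in the mapping rather than the parser:

```fsharp
module Domain =
    type Behaviour = Naughty | Nice
    type Gift = { Gift: string; Quantity: int }
    type Child = { Name: string; Behaviour: Behaviour; Gifts: Gift list }

type Line =
    | Child of string * Domain.Behaviour
    | QuantifiedGift of string * int
    | SingleGift of string

open Domain

/// Hypothetical mapping from parsed lines to the domain model. The domain,
/// not the parser, decides that a gift with no quantity means one of it.
let toDomain (lines: Line list) : Child list =
    let addGift (gift: Gift) (children: Child list) =
        match children with
        | current :: rest -> { current with Gifts = current.Gifts @ [ gift ] } :: rest
        | [] -> failwith "Found a gift before any child"

    let step (children: Child list) (line: Line) =
        match line with
        | Line.Child (name, behaviour) ->
            { Name = name; Behaviour = behaviour; Gifts = [] } :: children
        | Line.QuantifiedGift (gift, quantity) ->
            addGift { Gift = gift; Quantity = quantity } children
        | Line.SingleGift gift ->
            addGift { Gift = gift; Quantity = 1 } children

    // Children are accumulated newest-first, so reverse at the end.
    lines |> List.fold step [] |> List.rev
```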

Before we get into the actual parsing of the lines, there’s a helper I added called `wsAround`:

```fsharp
open FParsec

let wsAround c = spaces >>. skipChar c >>. spaces
```

This is a function that creates a parser based on a single character `c` and allows the character `c` to be surrounded by whitespace (the `spaces` parser). The `skipChar` function says that I don’t care about parsing the value of `c`, just that `c` has to be there. I’ll go into the `>>.` later on, but it is one of FParsec’s custom operators for combining parsers.

So `wsAround ':'` lets me parse `:` with potential whitespace either side of it.

It can be used as part of parsing any of the following:

```text
a : b
a:b
a: b
```

And as the examples above show, there are a few places where we don’t care about whitespace either side of a separator:

- Either side of the `:` separating the name and behaviour.

- Before/after the `-` that precedes either type of gift.

- Either side of the `*` for quantified gifts.
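Here is a small sketch of `wsAround` handling each of the spacings above. It can be run as an F# script and assumes FParsec is pulled from NuGet with `#r "nuget: FParsec"`. The `pPair` parser (two letters around a `:`) and the `parsePair` helper are hypothetical, made up just for this demonstration:

```fsharp
#r "nuget: FParsec"

open FParsec

let wsAround c = spaces >>. skipChar c >>. spaces

// Hypothetical parser: a letter, a ':' with optional whitespace around it,
// then another letter. The type annotation pins the user state to unit.
let pPair : Parser<char * char, unit> =
    letter .>> wsAround ':' .>>. letter

// Turn FParsec's ParserResult into an option for easy inspection.
let parsePair text =
    match run pPair text with
    | Success (pair, _, _) -> Some pair
    | Failure _ -> None

for text in [ "a : b"; "a:b"; "a: b"; "a b" ] do
    printfn "%s -> %A" text (parsePair text)
```

The last input fails because, once `spaces` has consumed the blank, `skipChar ':'` finds `b` instead of a colon.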

Parsing Children

A child line is defined as “a name and behaviour separated by a `:`”.

For example:

```text
Dave : Nice
```

And as stated above, there can be any amount (or none) of whitespace around the `:`.

The `pName` function defines how to parse a name:

```fsharp
let pName =
    let endOfName = wsAround ':'
    many1CharsTill anyChar endOfName |>> string
```

`many1CharsTill` is a parser that runs two other parsers. The first argument is the parser it uses to read “many chars”; the second argument is the parser that tells it when to stop.

Here it parses any character using `anyChar` until it reaches the `endOfName` parser, which looks for `:` with whitespace around it.

The result of the parser is then passed through `string` using the `|>>` operator. This is harmless but not strictly needed, as `many1CharsTill` already returns a `string`.

The `pBehaviour` function parses naughty or nice into the discriminated union:

```fsharp
let pBehaviour =
    (pstringCI "nice" >>% Domain.Nice)
    <|> (pstringCI "naughty" >>% Domain.Naughty)
```

This defines two parsers, one for each case, and uses the `<|>` operator to choose between them. `pstringCI "nice"` parses the string `nice` case-insensitively, and then the `>>%` operator discards the parsed string and just returns `Domain.Nice`.

These two functions are combined to create the `pChild` function that can parse the full line of text into a `Child` line.

```fsharp
let pChild =
    let pName = //...
    let pBehaviour = //...
    pName .>>. pBehaviour |>> Child
```

`pName` and `pBehaviour` are combined with the `.>>.` operator to create a tuple of each parser’s result, then that tuple is passed to the `Child` line constructor by the `|>>` operator.

Parsing Gifts

Both gifts make use of the

startOfGiftNameparser function:let startOfGiftName = wsAround '-'A single gift is parsed with:

let pSingleGift = let allTillEOL = manyChars (noneOf "\n") startOfGiftName >>. allTillEOL |>> SingleGiftThe

allTillEOLfunction was taken from this StackOverflow answer and parses everything up to the end of a line.This is combined with

startOfGiftNameusing the>>.operator, which is similar to the.>>.operator, but in this case I only want the result from the right-hand side parser - in this case theallTillEOL, this is then passed into theSingleGiftunion case constructor.A quantified gift is parsed with:

let pQuantifiedGift = let endOfQty = wsAround '*' let pGiftName = startOfGiftName >>. manyCharsTill anyChar endOfQty pGiftName .>>. pint32 |>> QuantifiedGiftThis uses

endOfQtyandpGiftNamecombined in a similar way to thepNameinpChild. Parsing all characters up until the*and only keeping the name part.pGiftNameis combined withpint32with the.>>.function to get the result of both parsers in a tuple and is fed into theQuantifiedGiftunion case.Putting it all together

The top level parser is

pLinewhich parses each line of the text into one of the cases from theLinediscriminated union.let pLine = attempt pQuantifiedGift <|> attempt pSingleGift <|> pChildThis uses the

<|>that was used for theBehaviour, but it also requires theattemptfunction before the first two parsers. This is because these parsers consume some of the input stream as they execute. Without theattemptit would start on a quantified gift, then realise it is actually a single gift and have no way to go into the next choice. Usingattemptallows the parser to “rewind” when it has a problem - like a quantified gift missing a*.If you want to see how this works, you need to decorate your parser functions with the

<!>operator that is defined here. This shows the steps the parser takes and allows you to see that it has “gone the wrong way”.Finally a helper method called

parseInputis used to parse the entire file:let parseInput input = run (sepBy pLine newline) inputThis calls the

runfunction passing in asepByparser for eachpLineseparated by anewline. This way each line is processed on it’s own.That is the end of the parser module.
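Putting the pieces together in one script makes it easy to try the parser on a sample from the REPL. This is a sketch, not the project’s code: the `Behaviour` and `Line` types are my reconstruction of the shapes described earlier (the real ones live in `Domain` and `Parser` modules), the parsers are condensed from the snippets above with type annotations added so they compile as top-level script values, and `sample`/`parsed` are hypothetical names. It assumes `dotnet fsi` for the `#r "nuget: FParsec"` line.

```fsharp
#r "nuget: FParsec"
open FParsec

// Assumed shapes, reconstructed from the descriptions in this post
type Behaviour = Naughty | Nice

type Line =
    | Child of string * Behaviour
    | QuantifiedGift of string * int
    | SingleGift of string

let wsAround c = spaces >>. skipChar c >>. spaces

// "Dave : Nice" -> Child ("Dave", Nice)
let pChild : Parser<Line, unit> =
    let pName = many1CharsTill anyChar (wsAround ':')
    let pBehaviour =
        (pstringCI "nice" >>% Nice) <|> (pstringCI "naughty" >>% Naughty)
    pName .>>. pBehaviour |>> Child

let startOfGiftName : Parser<unit, unit> = wsAround '-'

// "- Lego" -> SingleGift "Lego"
let pSingleGift : Parser<Line, unit> =
    startOfGiftName >>. manyChars (noneOf "\n") |>> SingleGift

// "- Bike * 2" -> QuantifiedGift ("Bike", 2)
let pQuantifiedGift : Parser<Line, unit> =
    let pGiftName = startOfGiftName >>. manyCharsTill anyChar (wsAround '*')
    pGiftName .>>. pint32 |>> QuantifiedGift

// attempt lets the choice rewind if a gift parser fails part-way through a line
let pLine = attempt pQuantifiedGift <|> attempt pSingleGift <|> pChild

let parseInput (input: string) = run (sepBy pLine newline) input

// Hypothetical sample input: one child followed by their two gifts
let sample = "Dave : Nice\n- Bike * 2\n- Lego"

let parsed =
    match parseInput sample with
    | Success (lines, _, _) -> lines
    | Failure (msg, _, _) -> failwith msg
```

The sample deliberately has no trailing newline: with `sepBy`, every `newline` is expected to be followed by another line, so a file ending in a newline would be expected to fail. FParsec’s `sepEndBy` is the variant that allows an optional trailing separator.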

Mapping to the Domain

The current output of `parseInput` is a `ParserResult<Line list, unit>`. Assuming success, there is now a list of `Line` union cases that need to be mapped into a list of `Child` from the domain.

These have separate structures:

- A `Child` record is hierarchical: it contains a list of `Gift`s.
- The list of `Line`s has structure defined by the order of elements: `Gift`s follow the `Child` they relate to.

Initially I thought about using a `fold` to go through each line: if the line was a child, add a child to the head of the results; if the line was a gift, add it to the head of the list of gifts of the first child in the list. This was the code:

```fsharp
let folder (state: Child list) (line : Line) : Child list =
    let addGift nm qty =
        let head::tail = state
        let newHead = { head with Gifts = { Gift = nm; Quantity = qty; } :: head.Gifts; }
        newHead :: tail
    match line with
    | Child (name, behaviour) -> { Name = name; Behaviour = behaviour; Gifts = []; } :: state
    | SingleGift name -> addGift name 1
    | QuantifiedGift (name, quantity) -> addGift name quantity
```

This worked, but because F# lists are implemented as singly linked lists, you add to the head of the list instead of the tail. This had the annoying feature that the `Child` items were reversed in the list. That's not so bad, but the list of gifts in each child was backwards too. I could have sorted both lists, but it would require recreating the results as the lists are immutable, and I wanted to keep to idiomatic F# as much as I could.

A `foldBack`, on the other hand, works backwards “up” the list, which meant I could get the results in the order I wanted, but there was a complication. When going forward, the first line was always a child, so I always had a child to add gifts to. Going backwards, there are just gifts until you get to a child, so you have to maintain a list of gifts until you reach a child line; then you can create a child, assign the gifts, and clear the list.

This is how I implemented it:

```fsharp
module Translation =
    open Domain
    open Parser

    let foldLine line state = //Line -> Child list * Gift list -> Child list * Gift list
        let cList, gList = state
        let addChild name behaviour =
            { Name = name; Behaviour = behaviour; Gifts = gList; } :: cList
        let addGift name quantity =
            { Gift = name; Quantity = quantity; } :: gList
        match line with
        | Child (name, behaviour) -> addChild name behaviour, []
        | SingleGift name -> cList, addGift name 1
        | QuantifiedGift (name, quantity) -> cList, addGift name quantity
```

The `state` is a tuple of lists: the first for the `Child list` (the result we want) and the second for keeping track of the gifts that are not yet assigned to children.

First this function deconstructs `state` into the child and gift lists, `cList` and `gList` respectively.

Next I’ve declared some helper functions for adding to either the `Child` or `Gift` list:

- `addChild` creates a new `Child` with the `Gifts` set to the accumulated list of gifts (`gList`) and prepends it onto `cList`.
- `addGift` creates a new `Gift` and prepends it onto `gList`.

Then the correct function is called based on the type of Line.

- Children return a new `Child list` with an empty `Gift list`.
- The gifts return the existing `Child list`, with the current item added to the `Gift list`.
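Here is a small, self-contained check of the ordering argument, running `foldLine` through `List.foldBack` over a few hand-written lines. The `Domain` and `Parser` modules are assumed shapes, reconstructed from how the records and union cases are used in this post; `lines`, `children` and `unassigned` are hypothetical names for the example.

```fsharp
// Assumed shapes, reconstructed from how this post uses them
module Domain =
    type Behaviour = Naughty | Nice
    type Gift = { Gift: string; Quantity: int }
    type Child = { Name: string; Behaviour: Behaviour; Gifts: Gift list }

module Parser =
    type Line =
        | Child of string * Domain.Behaviour
        | SingleGift of string
        | QuantifiedGift of string * int

open Domain
open Parser

// foldLine as above: state is (children so far, gifts not yet assigned to a child)
let foldLine line state =
    let cList, gList = state
    let addChild name behaviour =
        { Name = name; Behaviour = behaviour; Gifts = gList } :: cList
    let addGift name quantity =
        { Gift = name; Quantity = quantity } :: gList
    match line with
    | Line.Child (name, behaviour) -> addChild name behaviour, []
    | SingleGift name -> cList, addGift name 1
    | QuantifiedGift (name, quantity) -> cList, addGift name quantity

// Hypothetical lines in file order: each child is followed by their gifts
let lines =
    [ Line.Child ("Dave", Nice)
      QuantifiedGift ("Bike", 2)
      SingleGift "Lego"
      Line.Child ("Shaw", Naughty) ]

// foldBack starts at "Shaw" and works up, so both lists come out in file order
let children, unassigned = List.foldBack foldLine lines ([], [])
```

Running the forward `folder` from earlier over the same lines would put `Shaw` before `Dave`, and `Lego` before `Bike`, which is exactly the reversal described above.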

The overall result is a tuple of all the `Child` records correctly populated, and an empty list of `Gift` records, as the last item processed will be the first row and that will be a `Child`.

```fsharp
let mapLinesToDomain lines = //ParserResult<Line list, unit> -> Child list
    let initState = [],[]
    let mapped =
        match lines with
        | Success (lines, _, _) -> Seq.foldBack foldLine lines initState
        | Failure (err, _, _) -> failwith err
    fst mapped
```

Finally, the output of `parseInput` can be piped into `mapLinesToDomain` to get the `Child list` we need:

```fsharp
let childList =
    Parser.parseInput input //Input is just a string from File.ReadAllText
    |> Translation.mapLinesToDomain
```

Summing up

I really like how simple parsers can be once written, but it takes some time to get used to how they work and how you need to separate the parsing and domain logic.

My main pain points were:

- Trying to get the domain model in the parser - adding Gifts to Children, setting default quantity to 1, etc resulted in a lot of extra code. Once I stopped this and just focussed on mapping to the AST it was much simpler. Another benefit was not having to map things into Records, just using tuples and discriminated unions allowed a much cleaner implementation.

- Not knowing about using `attempt`. I just assumed `<|>` worked like pattern matching; it turns out it doesn’t.

I made heavy use of the F# REPL and found it helped massively as I worked my way through writing each parser and then combining them together. For example, I first wrote the Behaviour parser and tested it worked correctly on just “Naughty” and “Nice”. Then I wrote a parser for the Child’s name and `:` and tested it on “Dave : Nice”, but only getting the name. Then I could write a function to combine the two together and check that the results were correct again. The whole development process was done this way: add a bit more code, a bit more example input, test in the REPL and repeat.

The whole code for this is on GitHub - it is only 115 lines long, including code to print the list of Children back out so I could see the results.

-

Setting up my Ubiquiti Network

For a while now, I’ve been having problems with my Virgin Media Super Hub 3 and the Wifi randomly dropping out. At first I attributed it to bad devices (old 2.4GHz stuff), and wasn’t that bothered as I mostly used a wired connection on my Desktop PC. However, since moving house I’m unable to use a wired connection - my PC and the Fibre are in opposite corners of the house - and even with a brand new Wifi Card, I’ve been experiencing the same problems. Another issue was that I could only get 150Mbps over Wifi, when I’m paying for 200Mbps.

I could have gone to Virgin Media support and requested a replacement, it would probably have been some hassle, but I’m sure they would have sorted it eventually.

But I still wanted something a bit better than what their standard Router/Wifi could offer, so it was time for an overhaul.

After seeing some blogs on people implementing Ubiquiti products in their house, I thought I’d give it a go.

I didn’t buy all that, but it’s pretty looking stuff

I’ll be the first to admit that I’m never the best at buying things online, and I’m no networking expert. So I ended up buying what I thought was enough bits - and technically it was - without proper research.

What I initially bought was:

- AC-PRO (Wifi)

- USG (Router)

- Cloud Key (a way to manage everything)

The first problem I came across was that I didn’t have enough Ethernet cables in my house (thrown away during the move). So I borrowed a couple from the office, and liberated one from another device in the house.

With just 4 Ethernet cables I just about managed to get everything set up, but it wasn’t pretty.

Initially I set up the USG, and then added the AC-PRO. To do this I had to set up the Controller Software on my Desktop. Then I got around to setting up the Cloud Key, and realised that it acts as the Controller in place of the software on my Desktop, so I had to start all over again.

I really struggled to get everything on the same LAN and keep the internet connected. At times I had to remove the Ethernet cable providing Internet so I could connect a Computer to the LAN to set up the Wifi; then, with the Wifi set up, I could disconnect that Computer's Ethernet cable to reconnect the Internet.

Lesson 1

Have enough Ethernet Cables before you start!

Lesson 2

Check how everything will connect together - I foolishly thought that the USG had built-in Wifi and the AC-PRO was a booster.

The little research I did online said you could use just the three devices without a switch, but I don’t see how people managed.

In the end I used the 3 spare ports on the VM Superhub (whilst in modem mode) as a switch for the AC-PRO, USG and Cloud Key.

Lesson 3

Set up the Cloud Key before anything else. Don’t download the Controller and start Adopting all the devices only to then realise you can do it all on the Cloud Key.

The Problems

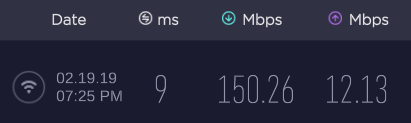

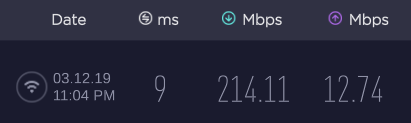

I was happy everything worked. I could get 200Mbps + speeds over Wifi again - something I wasn’t able to do with the SuperHub:

Before

After

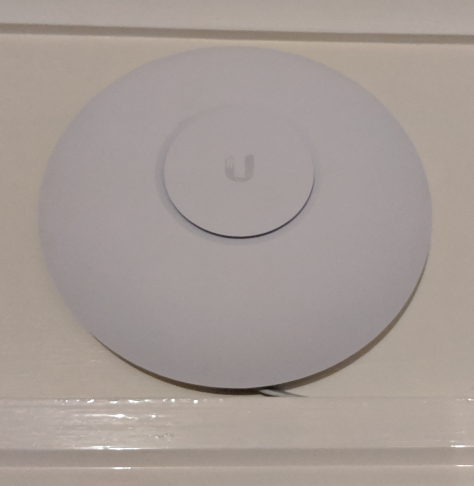

The problem I had now was that the AC-PRO was in a corner with everything else, meaning I wasn’t getting the best range; ideally I wanted it in the middle of my house. Moving it would require a power and Ethernet cable running the 10m+ to it, as well as the Power Adaptor, which would be ugly and not pass the Spouse Approval Test.

I also had an abundance of things plugged in in that corner, so I needed a way to move it and make it pretty.

Solution

I decided to fork out a little more money and get a Ubiquiti switch with PoE (power over ethernet) coming out from it so that I could power the AC-PRO (and Cloud Key) without a power cable.

As those are the only 2 devices that need PoE, I got a:

- US-8-60W

to add into the mix.

That provides 4 PoE ports, and is capable of powering a Cloud Key and AC-PRO.

Now I have my AC-PRO connected via a Flat White CAT7 cable, and not looking ugly at all.

I love how you cannot see the wire above the doorway unless you really look.

The rest of the devices are wired up with Flat Black CAT7 cables (except the Tivo).

End Result

I’m really happy with the performance of everything, and the setup was really easy - except for my own failings above. Adding the switch in was just plug-in, go to the web interface and press “Adopt”.

The devices I have connected at the moment are:

```
VM Router (modem)
 |
 +-- USG
      |
      +-- US-8-60W (switch)  □□□▣ □□□□
           |
           +-- PS4            ▣□□□ □□□□
           +-- Tivo           □▣□□ □□□□
           +-- Pi Hole        □□□□ □□▣□
           +-- Cloud Key      □□□□ □□□▣
           +-- AC-PRO (wifi)  □□□□ □▣□□
```

The management via the Cloud Key / Controller is awesome. There are so many settings, and it is so easy to control everything. I’ve not had a proper play yet, but so far my favourite feature is being able to assign aliases to devices so I know what everything is - most phones just show up as MAC addresses on the Superhub. Simple things like that always make me happy.

Final thoughts

I started writing this post a few months ago, but due to the stresses of moving house, it’s taken me 6 months to complete. But now I’ve had some time running with the above setup I can say that it is rock solid. I’ve had no problems, and no complaints from the family either - you know you got it right if they don’t complain.

Changes since I started:

- I’ve added a pi-hole to my network to block ads on mobile devices. This is something I wouldn’t have been able to do on the VM router, as I could not assign DNS to the DHCP clients, and manually changing it per device would not have been acceptable.

- I’ve installed the Unifi Network app on my phone to help manage it when I’m away.

- I’ve turned off the blue glow on the AC-PRO - it’s pretty, but it did make the house glow all night.

Other than that, I’ve just been applying the odd updates and keeping an eye on things.

If anyone is thinking about getting setup with this, feel free to reach out to discuss, I can share what little I know and maybe save you from my mistakes :)

-

Deploy a website for a Pull Request

Some of my colleagues shared a great idea the other day for one of our internal repositories…

Spin up a new website for each PR so you can see what the finished site will look like.

I really like this idea, so I thought I’d change the Fable Xmas List to deploy a new version on each PR I submitted.

Note: I’ve only done this for my repository, not Forks.

Previous Setup

The Xmas List code is built and deployed by Azure Pipelines from my Public Azure DevOps to a static website in an AWS S3 Bucket.

The previous process was to only trigger a Build on pushes to `master`, and if everything succeeded then a release was triggered automatically to push the latest code into the Bucket.

The live site lives on:

https://s3-eu-west-1.amazonaws.com/xmaslist/index.html

Plan

The plan is to deploy each Pull Request to another bucket with a naming convention of:

https://s3-eu-west-1.amazonaws.com/xmaslist-pr-branch-name/index.html

I could have used a subfolder in another bucket, but I thought I’d keep it simple here.

The Pipeline for pushes to `master` will remain unchanged.

Implementing

To get this to work, you will need to change the Build and Release pipelines.

Build

The first thing you will need to do is get the name of the Pull Request branch in the Release. At the moment this is only available in the Build via the

SYSTEM_PULLREQUEST_SOURCEBRANCHvariable.I’ll use the

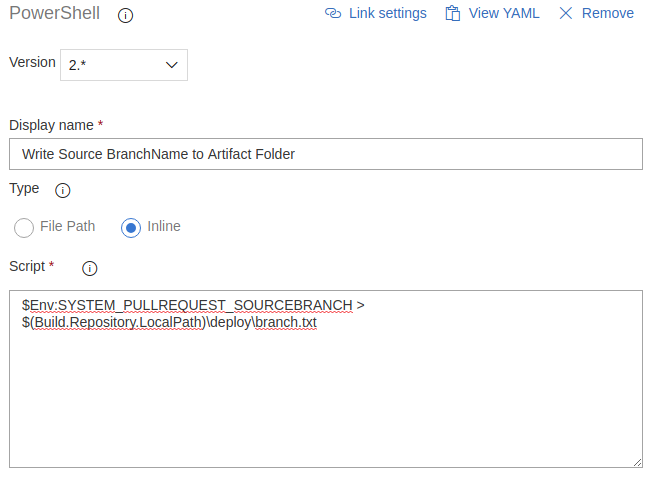

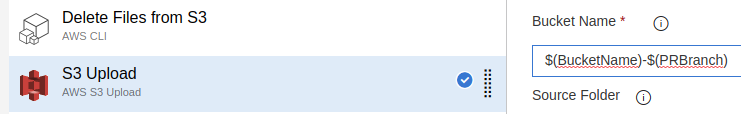

UPPER_CASEversion of the variable names when working in PowerShell and the$(Title.Case) version when working in the Task.To pass the value of a variable from the Build to the Release you will have to add it into the Pipeline Artifact. As I only had a single value to pass, I just used a text file with the value of the variable in it.

I added a PowerShell Task and used an inline script:

The script is:

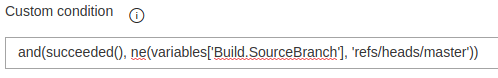

```powershell
$Env:SYSTEM_PULLREQUEST_SOURCEBRANCH > $(Build.Repository.LocalPath)\deploy\branch.txt
```

`deploy` is the root of the published website.

To stop the file being added to the live site deployments, I set the Custom Conditions on the task to:

```
and(succeeded(), ne(variables['Build.SourceBranch'], 'refs/heads/master'))
```

It only writes the file if the source branch is not equal (`ne`) to `master`.

The Build will now publish the Pipeline artifact for Pull Requests with the name of the PR branch in a file called `branch.txt`.

This is a little bit of a pain, but it is the only way I can find.

Note: There is a variable in the Release Pipeline called `Release.Artifacts.{alias}.SourceBranchName`, but in a Pull Request this is set to `merge`. That is because we build the PR branch of `refs/pull/5/merge`. There isn’t a Pull Request source branch name in Releases at this moment.

Releases

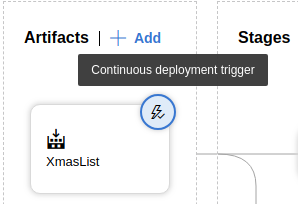

To enable a release on a Pull Request you first need to alter the triggers…

Triggers

Click on the Continuous Deployment Trigger icon and then enable the Pull Request Trigger and set the source branch:

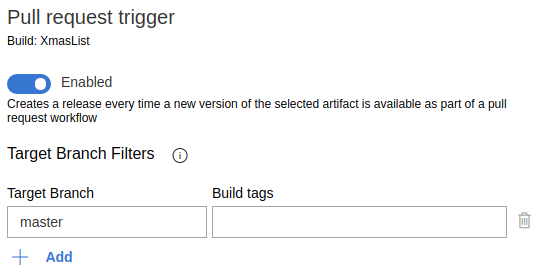

To keep things simple I created a duplicate Stage of the live stage, called it PR Deployment(s), and changed its pre-deployment conditions to run on Pull Requests:

Stages

With the duplicate stage setup, I needed to add some extra logic to change the bucket path on AWS.

Again, as I was keeping things simple, I just duplicated and changed the stage. I could have created a Task Group and made the Tasks conditional, but this way it is easier to know what each stage does.

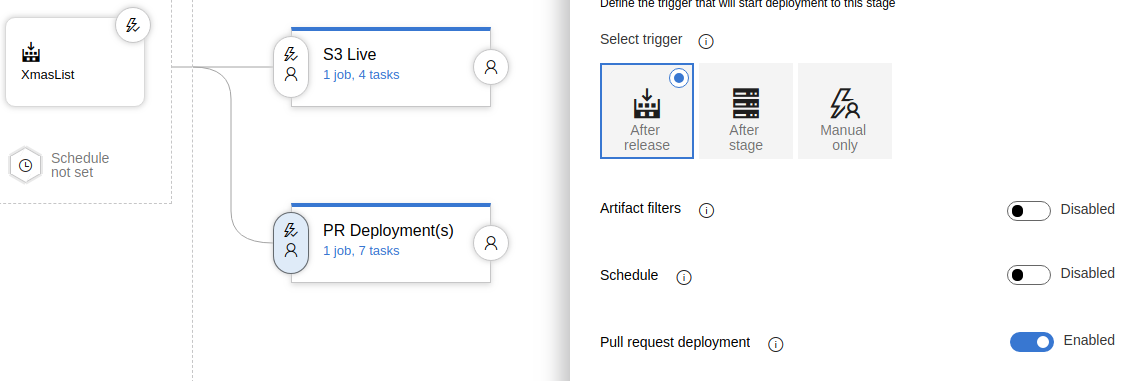

To make the Branch name available to the Agent, I needed to get the contents of the `branch.txt` file from the Pipeline Artifact that was created by the build.

I added a PowerShell task with an Inline script with the following:

```powershell
$p = $Env:AGENT_RELEASEDIRECTORY + '\' + $Env:RELEASE_PRIMARYARTIFACTSOURCEALIAS + '\drop\branch.txt'
$PRBranch = Get-Content $p -Raw
del "$p"
Write-Host $PRBranch #for debugging
Write-Host "##vso[task.setvariable variable=PRBranch;]$PRBranch"
```

This gets the path to `branch.txt` into a variable called `$p`, reads the entire contents into a variable called `$PRBranch`, and deletes `branch.txt` so it isn’t published.

The line `Write-Host "##vso[task.setvariable variable=PRBranch;]$PRBranch"` will set a variable called `$(PRBranch)` in the Build agent, so that I can access it in the AWS tasks later.

The final piece is to use this in the S3 tasks:

Note: `$(BucketName)` is set to `xmaslist`.

The last thing I added was to write out the URL of the website at the end of the Process, so I can just grab it from the logs and try it without having to remember the address.

Summary

This is a really nice way to test out any changes on a version of your site before merging a pull request, even if it is only for your own PRs. This will be much more powerful if a team is working on the repository.

There will be many different ways to achieve this, especially if you are using Infrastructure as Code (e.g. ARM Templates on Azure), but this works even on simple static sites.

-

Santa's Xmas List in F# and Fable

This post is part of the F# Advent Calendar 2018. Many thanks to Sergey Tihon for organizing these.

So this year I decided to write something for the F# Advent Calendar, and even though I picked a date far enough in the future, panic still set in. I’m not one for “ideas on demand”, and after a bit of deliberating about Xmas themed games, I finally settled on something that let me explore my favourite parts of F# at the moment:

- Domain Modelling

- Testing

- Event Sourcing

- Fable & Elmish

The Concept

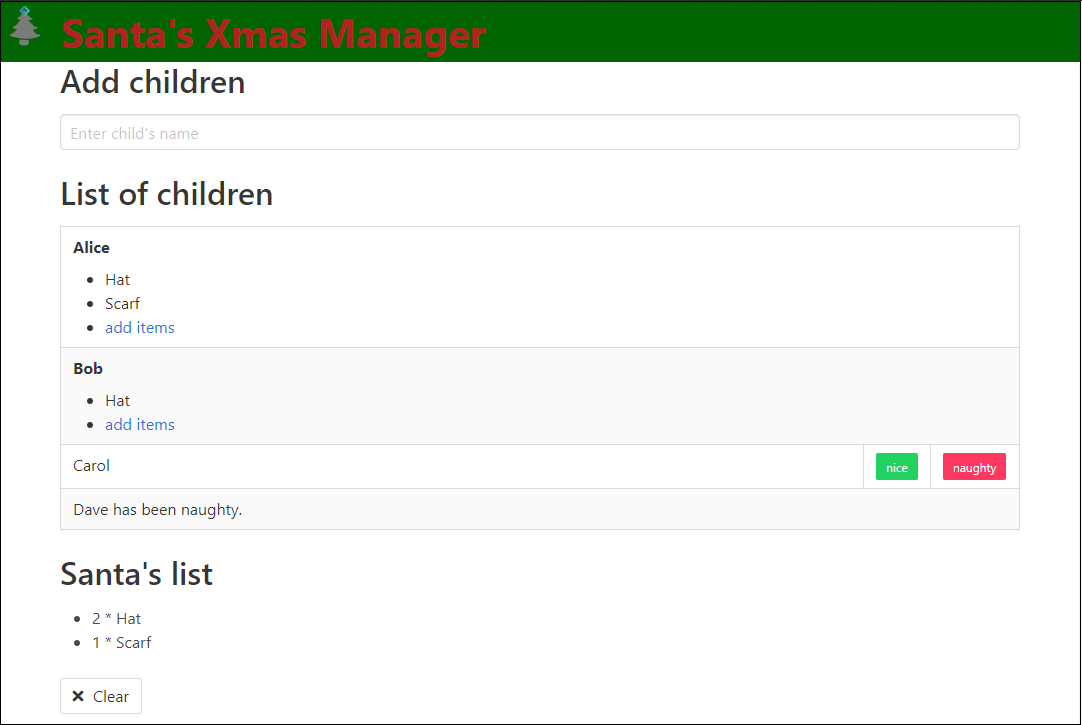

My initial design was for something a bit more complicated, but I scaled it down into a simple Web App where Santa can:

- Record children’s names

- Who’s been Naughty and who’s been Nice

- What presents Nice children are getting

- See an overall list of all the presents he needs to send to the elves

Click here to have a play

The app is written in F#, using Fable, Elmish and Fulma (which I also used to write Monster Splatter) and all the associated tooling in SAFE stack. I did consider writing a back-end for it, but decided to keep things simple.

The Domain Model

A common problem with any Model-View-X architecture is that everything that isn’t POCO (Model) or UI (View) related ends up in X, so I look for ways to make sure the Domain logic can be quickly broken out and separated from X.

With Elmish, this was very easy. I began by modelling the Domain and the Operations that can be performed on it:

```fsharp
type Item = { Description: string }

type NaughtyOrNice =
    | Undecided
    | Nice of Item list
    | Naughty

type Child =
    { Name: string
      NaughtyOrNice: NaughtyOrNice }

type SantasItem =
    { ItemName: string
      Quantity: int }

type Model =
    { CurrentEditor: CurrentEditorState //Not shown
      ChildrensList: Child list
      SantasList: SantasItem list }

type AddChild = string -> Model -> Model * EventStore.Event
type AddItem = string -> Item -> Model -> Model * EventStore.Event
type ReviewChild = string -> NaughtyOrNice -> Model -> Model * EventStore.Event
```

There are a few things above, so let’s go through the types:

- The `Model` holds a list of `Child` records and `SantasItem` records.
- A child has a name and a Naughty or Nice status. If they are Nice, they can also have a list of Items.
- Santa’s items have a quantity with them.
- I didn’t separate the UI stuff (`CurrentEditor`) from the Domain model; this was just to keep things simple.

And the functions:

- `AddChild` takes in a `string` for the child’s name as well as the current model, and returns an updated model and `Event` (see below).
- `AddItem` takes in a child’s name, an item, and the current state, and also returns an updated model and `Event`.
- `ReviewChild` also takes in a child’s name and whether they are naughty or nice, as well as the current state, and, guess what, returns an updated model and `Event`.
- The `Event` is explained in the Event Sourcing section below, but is simply a Union Case representing what just happened.

There’s no need to go into the implementation of the Domain, it’s pretty basic, but it is worth pointing out that adding an item to a Nice child also adds an item to `SantasList`, or increments the quantity of an existing item.

Reuse-Reuse-Reuse

The main take away here is that the Domain module contains pure F#, no Fable, no Elmish, just my Domain code. This means if I wanted to run it on my F# Services I could use the exact same file and be guaranteed the exact same results.

Full source can be seen here.
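The Domain implementation isn’t shown here, but the one rule called out above (adding an item for a Nice child also adds it to `SantasList`, or increments an existing item’s quantity) is easy to sketch in plain F#. `addToSantasList` is a hypothetical name, and matching items by exact `ItemName` is my assumption rather than the app’s confirmed behaviour:

```fsharp
// Same shape as SantasItem in the Domain model above
type SantasItem = { ItemName: string; Quantity: int }

// Hypothetical helper: bump the quantity if the item is already on Santa's list
// (matched by exact ItemName, an assumption), otherwise append it with quantity 1
let addToSantasList (itemName: string) (santasList: SantasItem list) : SantasItem list =
    if santasList |> List.exists (fun i -> i.ItemName = itemName) then
        santasList
        |> List.map (fun i ->
            if i.ItemName = itemName then { i with Quantity = i.Quantity + 1 } else i)
    else
        santasList @ [ { ItemName = itemName; Quantity = 1 } ]

// Two children asking for a bike, one asking for Lego
let santasList =
    []
    |> addToSantasList "Bike"
    |> addToSantasList "Lego"
    |> addToSantasList "Bike"
```

It only takes and returns immutable values and uses nothing but FSharp.Core, which is what lets this kind of Domain code be shared between Fable and .NET.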

Testing

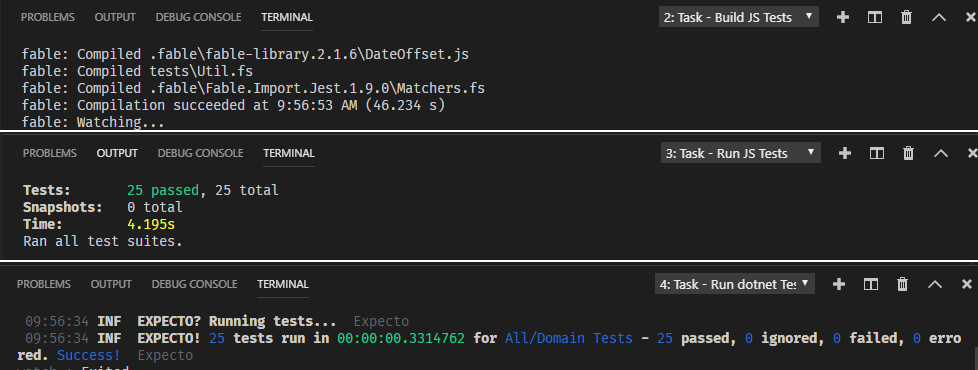

I just said I could be guaranteed the exact same results if I ran this code on my Services… but how?

Fable transpiles my F# into JavaScript and runs it in the browser, how could I know this works the same in .NET Core when run on the server?

The answer is Testing - the only way you can be sure of anything in software.

Using the Fable Compiler’s tests as inspiration and the Fable bindings for Jest, I’ve created a suite of tests that can be run against the generated JavaScript and the compiled .NET code.

As of writing there is a Bug with Fable 2 and the Jest Bindings, but you can work around them.

The trick is to use the `FABLE_COMPILER` compiler directive to produce different code under Fable and .NET.

For example, the `testCase` function is declared as:

```fsharp
let testCase (msg: string) (test: unit->unit) = msg, box test
```

in Fable, but as:

```fsharp
open Expecto

let testCase (name: string) (test: unit -> unit) : Test = testCase name test
```

in .NET code.

Full source can be seen here.

What this gives me is a test that can now be written once and run many times, depending on how the code is compiled:

```fsharp
testCase "Adding children works" <| fun () ->
    let child1 = "Dave"
    let child2 = "Shaw"
    let newModel =
        addChild child1 defaultModel
        |> addChild child2
    let expected =
        [ { Name = child1; NaughtyOrNice = Undecided }
          { Name = child2; NaughtyOrNice = Undecided } ]
    newModel.ChildrensList == expected
```

What’s really cool is how you can run these tests.

The JS Tests took 2 different NPM packages to get running:

- fable-splitter

- Jest

Both of these operated in “Watch Mode”, so I could write a failing test, Ctrl+S, and watch it fail a second later. Then write the code to make it pass, Ctrl+S again, and watch it pass. No building, no running tests, just write and save.

As the .NET tests are in Expecto, I can have the same workflow for them too with `dotnet watch run`.

I have all 3 tasks set up in VS Code and can set them running with the “Run Test Task” command. See my `tasks.json` and `package.json` files for how these are configured.

I have a CI/CD Pipeline setup in Azure Dev Ops running these tests on both Windows and Ubuntu build agents. That takes 25 written tests to 100 running tests.

Event Sourcing

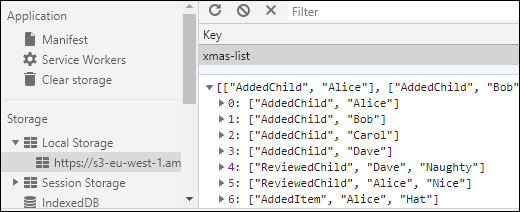

As I decided to avoid building a back-end for this I wanted a way to maintain the state on the client by persisting it into Local Storage in the browser.

Instead of just serializing the current Model into JSON and storing it, I thought I’d try out storing each of the users actions as an Event and then playing them back when the user (re)loads the page.

This isn’t a pure event sourcing implementation, but one that uses events instead of CRUD for persistence. If you want to read a more complete introduction to F# and Event Sourcing, try Roman Provazník’s Advent Post.

Most of the application is operating on the “View / Projection” of the events, instead of the Stream of events.

To model each event I created a simple discriminated union for the `Event`, and also used type aliases for all the strings, just to make it clearer what all these strings are:

```fsharp
type Name = string
type Item = string
type Review = string

type Event =
    | AddedChild of Name
    | AddedItem of Name * Item
    | ReviewedChild of Name * Review
```

These are what are returned from the Domain model, representing what has just changed. They are exactly what the user input; no normalising strings, for example.

The “Event Store” in this case is a simple `ResizeArray<Event>` (aka `List<T>`), and each event is appended onto it.

Every time an event is appended to the Store, the entire store is persisted into Local Storage. Fable has “bindings” for accessing local storage, which means you only need to call:

```fsharp
//Save
Browser.localStorage.setItem(key, json)
//Load
let json = Browser.localStorage.getItem(key)
```

For serialization and deserialization I used Thoth.Json and just used the “Auto mode” on the list of Events.
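Outside the browser, the same append-then-replay idea can be sketched with nothing but FSharp.Core. This is not the app’s code: an in-memory `ResizeArray` stands in for Local Storage (no JSON involved), and the `Model`, `updateChild` and `apply` names describe a deliberately tiny, hypothetical model rather than the real Domain:

```fsharp
// The Event cases as defined above
type Name = string
type Item = string
type Review = string

type Event =
    | AddedChild of Name
    | AddedItem of Name * Item
    | ReviewedChild of Name * Review

// Hypothetical, deliberately tiny model: child name -> (review, items)
type Model = Map<Name, Review option * Item list>

// Apply a change to an existing child; events for unknown children are ignored
let updateChild name f (model: Model) : Model =
    match Map.tryFind name model with
    | Some entry -> Map.add name (f entry) model
    | None -> model

// Fold one event into the model
let apply (model: Model) (ev: Event) : Model =
    match ev with
    | AddedChild name -> Map.add name (None, []) model
    | ReviewedChild (name, review) ->
        updateChild name (fun (_, items) -> Some review, items) model
    | AddedItem (name, item) ->
        updateChild name (fun (review, items) -> review, items @ [ item ]) model

// The in-memory "event store": events are only ever appended
let store = ResizeArray<Event>()
store.Add(AddedChild "Dave")
store.Add(ReviewedChild ("Dave", "Nice"))
store.Add(AddedItem ("Dave", "Bike"))

// Replay: start from an empty model and fold every stored event into it
let replayed = store |> Seq.fold apply Map.empty
```

Because the model is only ever derived from the events, throwing it away and replaying the store always gets you back to the same state.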

When the page is loaded, all the Events are loaded back into the “Event Store”, but now we need to somehow convert them back into the Model and recreate the state that was there before.

In F# this is actually really easy.

```fsharp
let fromEvents : FromEvents =
    fun editorState events ->
        let processEvent m ev =
            let updatedModel, _ =
                match ev with
                | EventStore.AddedChild name -> m |> addChild name
                | EventStore.ReviewedChild (name, non) -> m |> reviewChild name (stringToNon non)
                | EventStore.AddedItem (name, item) -> m |> addItem name { Description = item }
            updatedModel
        let state0 = createDefaultModel editorState
        (state0, events) ||> List.fold processEvent
```

It starts by declaring a function to process each event, which will be used by the `fold` function.

The `processEvent` function takes in the current state `m` and the event to process `ev`, matches and deconstructs the values from `ev`, passes them to the correct Domain function along with the current model (`m`), and returns the updated model (ignoring the returned event, as we don’t need it here).

Next it creates `state0` using the `createDefaultModel` function. You can ignore the `editorState`; as I mentioned above, it has leaked in a little.

Then it uses a `fold` to iterate over each event, passing in the initial state (`state0`) and returning a new state. Each time the fold goes through an event in the list, the updated state from the previous iteration is passed in. This is why you need to start with an empty model, which is then built up with the events.

Summing Up

There’s a lot more I could have talked about here:

- How I used Fulma / Font Awesome for the Styling.

- How I used Fable React for the UI.

- How I used Azure Pipelines for the CI/CD Pipeline to S3.

- How I never needed to run a Debugger once.

- How I used FAKE for x-plat build scripts.

But, I think this post has gone on too long already.

What I really wanted to highlight and show off are the parts of F# I love. Along with that, the power of the SAFE-Stack for building apps that are using the same tech stacks people are currently using, like React for UI and Jest for Testing, but show how Fable enables developers to do so much more:

- 100% re-usable code

- Type safe code

- Domain modelling using Algebraic Data Types

- Event Sourcing

- Familiarity with .NET

- Functional Architecture (Elmish).

I also wanted to share my solutions to some of the problems I’ve had, like running the tests, or setting up webpack, or using FAKE.

It doesn’t do everything that the SAFE Demo applications do, but I hope someone can find it a useful starting point for doing more than just TODO lists. Please go check out the source, clone it and have a play.

If anyone has any questions or comments, you can find me on Twitter, or open an Issue in the Repo.

Don’t forget to have a play ;)

-

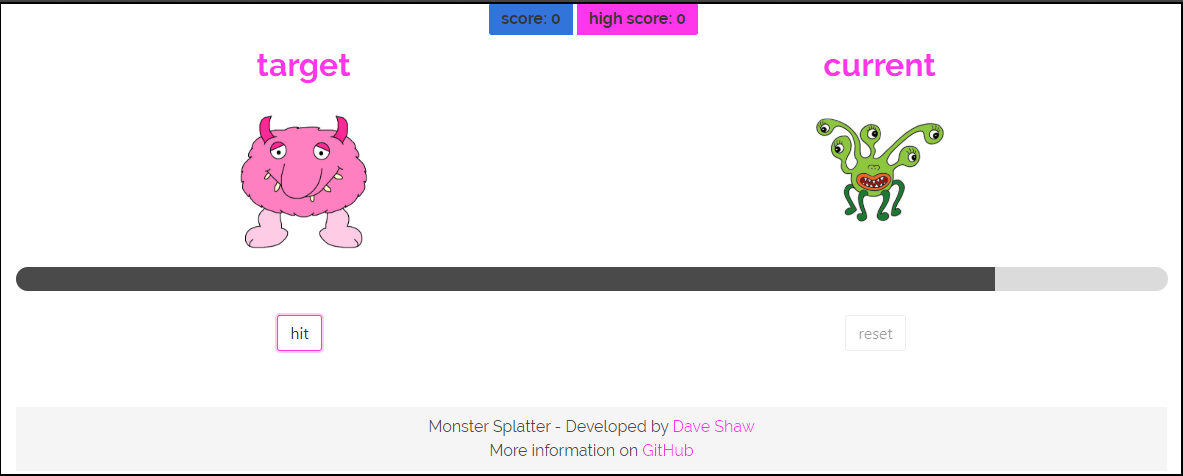

Playing with Fable and the SAFE Stack

I’ve recently started looking at Fable as a way to use F# to write Web Apps.

For the past 2 years I have had a game that I wrote in TypeScript as a playground for learning more about the language. However, not being a JavaScript or a game developer, I think I had some fundamental problems with the app that I never managed to escape.

Over the past few months Fable has kept appearing on my twitter stream and looked really interesting, especially as it can create React Web Apps, which is something I need to know more about.

I began by using the SAFE-Dojo from CompositionalIT as a playground to learn and found it did a really good job of introducing the different parts of the SAFE-Stack.

Using it as a reference, I managed to re-write my game in Fable in very little time.

If you want to see it in action you can have a look here. It’s quite basic and doesn’t push the boundaries in any way, but it’s inspired by my Daughter, and she loves to help me add features.

Play it now / View Code

Why do I love SAFE?

There are a number of awesome features of this whole stack that I want to shout about:

Less Bugs

With the old version, I found managing state really hard, there was a persistent bug where the user could click “hit” twice on the same monster and get double points.

With Fable and Elmish, you have a really great way of managing state. Yes, it is another model-view-everything else approach. But the idea of the immutable state coming in and new state being returned is a great fit for functional programming.

You are also coding in F# which can model Domains really well meaning you are less likely to have bugs.

Less Code

I’m always surprised by how small each commit is. I might spend 30 minutes or more messing with a feature, but when I come to commit it, it’s only ever a few lines of code. Even replacing the timer for the entire game was a small change.

Fulma, or should I say Bulma

The SAFE Stack introduced me to Fulma which is a set of Fable helpers for using Bulma.

At first I struggled to get to grips with Fulma, but once I realised how it just represented the Bulma stylings, I found it much easier. Even someone as bad at UI as me, can create something that doesn’t look terrible.

I mostly kept the Bulma documentation open when styling the app as it had better examples and I could translate them to Fulma in my head.

It’s React

React is quite a big thing at the moment, and something I’m looking to use at work. Having something that is React, but isn’t pure JS is great for me. It also supports Redux, so things like the Chrome React and Redux developer tools work with it.

These are amazingly useful tools for debugging Web Apps, even ones this simple.

Conclusion

I’m going to keep looking for situations where I can use the SAFE-Stack. Next will have to be something more complicated - with multiple pages and a back-end with some persistence.

This will give me a feel for whether it could be something I could use every day; I’d really like to code this way all the time.

I’m already looking to push F# at work, and this would be a great complement.

Play it now / View Code

-

You might not be seeing exceptions from SQL Server

This post describes a problem I noticed whereby I wasn’t seeing errors from my SQL code appearing in my C#/.NET code.

I was recently debugging a problem with a stored procedure that was crashing. I figured out what caused the stored procedure to crash and replicated the crash in SQL Management Studio, but calling it from the application code in my development environment didn’t throw an exception. What was even stranger was that the bug report was from an exception thrown in the C# code; I had the stack trace to prove it.

After a bit of digging through the code, I noticed a difference between my environment and production that meant I wasn’t reading all the results from the `SqlDataReader`. The C# was something like this:

```csharp
var reader = command.ExecuteReader();
if (someSetting) // Some boolean I didn't have set locally.
{
    if (reader.Read())
    {
        // reading results stuff.
    }
    reader.NextResult();
}
```

Changing `someSetting` to `true` in my development environment resulted in the exception being thrown.

What’s going on?

The stored procedure that was crashing looked something like this:

```sql
create procedure ThrowSecond as
    -- Selecting something, anything
    select name from sys.databases
    raiserror (N'Oops', 16, 1); -- This was a delete violating a FK, but I've kept it simple for this example.
```

It turns out that if SQL Server raises an error in a result set other than the first, and you don’t try to read that result set, you won’t get an exception thrown in your .NET code.

I’ll say that again, there are circumstances where SQL Server raises an error, and you will not see it thrown in your .NET Code.

Beware transactions

The worst part of this… if you are using transactions in your application code, e.g. using `TransactionScope`, you will not get an exception raised. That means nothing will stop it calling `Complete` and committing the transaction, even though part of your operation failed.

```csharp
void Update()
{
    using (var tx = new TransactionScope())
    {
        DeleteExisting(); // Delete some data
        InsertNew();      // Tries to save some data; SQL errors, but the exception doesn't reach .NET
        tx.Complete();
    }
}
```

Take the hypothetical example above, and suppose `InsertNew()` calls a stored procedure like the one before, using C# like the previous examples. It will delete the existing entry but will not insert a new one.

When does it happen?

To figure out when this does and doesn’t happen, I wrote a number of tests using 3 different stored procedures and 4 different ways of calling them from C#.

Stored Procedures

```sql
create procedure ThrowFirst as
    raiserror (N'Oops', 16, 1);
    select name from sys.databases
go

create procedure ThrowSecond as
    select name from sys.databases
    raiserror (N'Oops', 16, 1);
go

create procedure Works as
    select name from sys.databases
go
```

CSharp

```csharp
void ExecuteNonQuery(SqlCommand cmd)
{
    cmd.ExecuteNonQuery();
}

void ExecuteReaderOnly(SqlCommand cmd)
{
    using (var reader = cmd.ExecuteReader())
    {
    }
}

void ExecuteReaderReadOneResultSet(SqlCommand cmd)
{
    using (var reader = cmd.ExecuteReader())
    {
        var names = new List<String>();
        while (reader.Read())
            names.Add(reader.GetString(0));
    }
}

void ExecuteReaderLookForAnotherResultSet(SqlCommand cmd)
{
    using (var reader = cmd.ExecuteReader())
    {
        var names = new List<String>();
        while (reader.Read())
            names.Add(reader.GetString(0));
        reader.NextResult();
    }
}
```

Results

The results are as follows:

| Test | Procedure | Throws Exception |
|---|---|---|
| ExecuteNonQuery | ThrowFirst | ✅ |
| ExecuteNonQuery | ThrowSecond | ✅ |
| ExecuteNonQuery | Works | n/a |
| ExecuteReader Only | ThrowFirst | ✅ |
| ExecuteReader Only | ThrowSecond | ❌ |
| ExecuteReader Only | Works | n/a |
| ExecuteReader Read One ResultSet | ThrowFirst | ✅ |
| ExecuteReader Read One ResultSet | ThrowSecond | ❌ |
| ExecuteReader Read One ResultSet | Works | n/a |
| ExecuteReader Look For Another ResultSet | ThrowFirst | ✅ |
| ExecuteReader Look For Another ResultSet | ThrowSecond | ✅ |
| ExecuteReader Look For Another ResultSet | Works | n/a |

Explained

The two problematic examples have a ❌ against them.

Those are the cases where you call `ExecuteReader` with the `ThrowSecond` stored procedure and don’t go near the second result set.

Calling `ThrowSecond` only raises an exception in the .NET code in two cases. The first is using `ExecuteNonQuery()`, which is no good if you have results. The second is calling `reader.NextResult()`, even when you only expect a single result set.

XACT_ABORT

I tried `SET XACT_ABORT ON`, but that made no difference, so I’ve left it out of the example.

Conclusion

I’m not sure what my conclusion is for this. I could say: don’t write SQL like this. Perform all your data-manipulation (DML) queries first, then return the data you want. This should stop errors from the DML being a problem, because they will always come before the result set you try to read.

However, I don’t like that. SQL Management Studio does raise the error, and I wouldn’t want to advocate writing your SQL to suit how .NET works. This feels like a .NET problem, not a SQL one.

I will say don’t write stored procedures that return results, and then write C# that ignores them. That’s just wasteful.

The only other solution would be to ensure you leave an extra `reader.NextResult()` after reading all of your expected result sets. This feels a little unusual too. It would probably be removed by the next developer, who might not know why it is there in the first place.

So in the end, I don’t know what the best approach is. If anyone has any thoughts or comments about this, feel free to contact me on Twitter.
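The same "one extra `NextResult()`" idea applies if you reach SQL Server from Python. Below is a minimal sketch against a PEP 249 (DB-API 2.0) cursor, such as one from pyodbc. It rests on some assumptions. In PEP 249, `nextset()` is optional, so some drivers don't implement it. The claim that a given driver surfaces the `ThrowSecond` error while advancing has not been verified here. The helper name `read_expected_then_drain` is hypothetical.

```python
def read_expected_then_drain(cursor):
    """Fetch the result set you expect, then step through any remaining ones.

    Mirrors the extra reader.NextResult() idea for a PEP 249 cursor.
    Assumption: the driver raises any error belonging to a later result
    set when nextset() moves onto it, so the error reaches the caller.
    """
    rows = cursor.fetchall()
    # PEP 249: nextset() returns None when there are no more sets,
    # otherwise a true value; it also discards any unread rows.
    while cursor.nextset():
        pass
    return rows
```

Putting the extra call in a named helper with a docstring also records *why* it is there. That may address the worry that the next developer would delete a bare `NextResult()`.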

Downloads

You can download the fully runnable examples from here:

They are LINQPad scripts that run against a LocalDB called “Dave”.

-

XMAS Pi Fun

I’ve had a few days off over XMAS, so I decided to have a play with my Raspberry Pi and the 3D XMAS Tree from ThePiHut.com.

With my (very) basic Python skills I managed to come up with a way of using a Status Board on one Pi to control the four different light settings on the XMAS Tree, running on another Pi (the “tree-berry”).

(sorry about the camera work, I just shot it on my phone on the floor)

All the source for this is on my GitHub, if you want to see it.

How it works

The “tree-berry” Pi has a Python SocketServer running on it, receiving commands from the client, another Python program running on the other Pi.
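A command channel like this could look roughly like the following sketch. It uses Python 3's `socketserver` module (named `SocketServer` in Python 2) and is not the code from the repo. Its assumptions: a plain-text protocol with one newline-terminated setting name per connection, the setting names `"1"` to `"4"`, and the `TreeServer` and `send_setting` names are all hypothetical.

```python
import socket
import socketserver

# One command per Status Board button; these names are hypothetical.
SETTINGS = {"1", "2", "3", "4"}


class CommandHandler(socketserver.StreamRequestHandler):
    """Reads a single newline-terminated setting name per connection."""

    def handle(self):
        setting = self.rfile.readline().decode("utf-8").strip()
        if setting in SETTINGS:
            self.server.on_setting(setting)  # hand off to whatever drives the lights
            self.wfile.write(b"ok\n")
        else:
            self.wfile.write(b"unknown\n")


class TreeServer(socketserver.TCPServer):
    """Runs on the "tree-berry"; on_setting is called with each valid command."""

    allow_reuse_address = True

    def __init__(self, address, on_setting):
        super().__init__(address, CommandHandler)
        self.on_setting = on_setting


def send_setting(host, port, setting):
    """Client side (the Status Board Pi): send one command, return the reply."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(setting.encode("utf-8") + b"\n")
        with sock.makefile("rb") as reply:
            return reply.readline().decode("utf-8").strip()
```

On the tree Pi this might be started with `TreeServer(("0.0.0.0", 9999), print).serve_forever()`. The other Pi would then call `send_setting("tree-berry.local", 9999, "2")` when a button is pressed. The port and hostname are placeholders.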

The server is very rudimentary. Each light setting was initially written as a separate python script with different characteristics on how it runs: some have

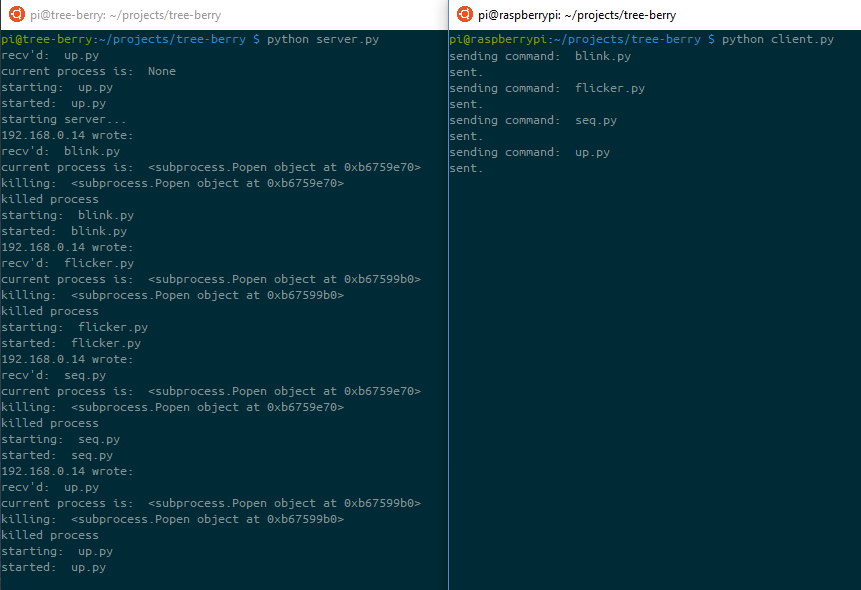

while True:loops, others just set the lights andpause. To save me from figuring out in how to “teardown” each setting and start a new one, I decided to fork a new process from the server, and then kill it before changing to the next setting. This makes it slow to change, but ensures I clean up before starting another program.The 2 consoles can be seen side by side here:
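The "one process per setting, kill it before the next" approach might be sketched as below. This sketch swaps in Python's `subprocess` module to launch each setting's script as a child process; it is not the author's forking code. The `LightController` class and the example script names are hypothetical. A process is used rather than a thread because Python gives you no way to kill a thread from outside. A process, by contrast, can be terminated whether it is spinning in a `while True:` loop or blocked in `pause`.

```python
import subprocess


class LightController:
    """Runs at most one light-setting program at a time, each in its own process."""

    def __init__(self, commands):
        # Maps a setting name to the argv that starts it,
        # e.g. {"1": ["python3", "star.py"]} (hypothetical script names).
        self.commands = commands
        self.current = None

    def switch_to(self, setting):
        """Stop whatever is running, then start the requested setting."""
        argv = self.commands[setting]  # look up first so a bad name leaves the lights alone
        self.stop()
        self.current = subprocess.Popen(argv)
        return self.current

    def stop(self):
        """Terminate the running setting (if any) and wait for it to exit."""
        if self.current is not None:
            if self.current.poll() is None:
                self.current.terminate()
            self.current.wait()  # reap the child so no zombie process is left behind
            self.current = None
```

On POSIX systems `terminate()` sends SIGTERM. A light script that should switch its LEDs off when stopped would therefore need to handle that signal itself.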

There’s a lot I need to learn about Python, but this is only for a few weeks a year ;).
